The AI Chip War Heats Up: OpenAI, Microsoft, and Apple’s Secret Silicon Projects

The AI arms race is no longer just about algorithms—it’s about who controls the silicon. As generative AI models balloon in size and complexity, the cost and speed of training them have become critical choke points. At the heart of this bottleneck lies the dominance of NVIDIA’s GPUs and Google’s TPUs, which currently power the majority of today’s AI workloads. But that’s changing—fast.

Now, tech giants like OpenAI, Microsoft, and Apple are moving quietly but aggressively into custom chip development. These companies aren’t just looking to save money—they’re trying to reshape the very foundations of AI infrastructure. Custom silicon gives them a strategic edge: better performance per watt, lower latency, reduced reliance on competitors, and long-term cost control.

In this post, we’ll explore how these companies are building their own chips, why it’s shaking up the global semiconductor landscape, and what this means for the future of AI economics. From OpenAI’s rumored “Project Tigris” to Apple’s edge-optimized Neural Engine and Microsoft’s enterprise-grade Maia chip, the AI chip war is heating up, and the stakes are enormous.

1. The Cost of Dependence: Why Everyone’s Moving Away from NVIDIA

For over a decade, NVIDIA has been the undisputed king of AI infrastructure. Its CUDA platform, combined with the raw power of its H100 and A100 GPUs, has made it the go-to vendor for anyone training large language models or running AI inference at scale. But success has a price—literally.

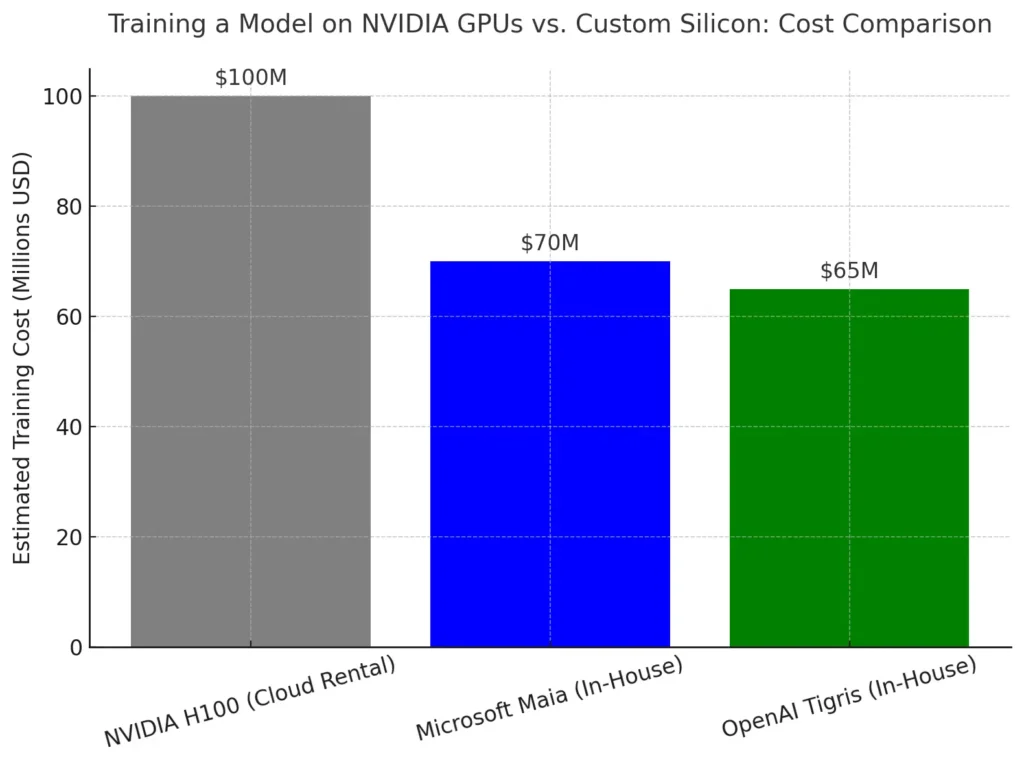

Training a state-of-the-art AI model like GPT-4 can reportedly cost more than $100 million, with much of that expense tied directly to NVIDIA’s premium hardware. Renting a single H100 in the cloud can cost $2 to $4 per GPU-hour, depending on availability and location. For startups and hyperscalers alike, these economics are brutal.
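To make these economics concrete, here is a rough back-of-the-envelope sketch in Python. The cluster size and run length are purely hypothetical assumptions chosen for illustration; only the $2 to $4 hourly range comes from the figures above.

```python
def rental_cost(num_gpus: int, days: float, usd_per_gpu_hour: float) -> float:
    """Total rental cost for a cluster of GPUs running around the clock."""
    gpu_hours = num_gpus * days * 24
    return gpu_hours * usd_per_gpu_hour


# Hypothetical training run (assumed values, not reported figures).
NUM_GPUS = 10_000
DAYS = 90

low = rental_cost(NUM_GPUS, DAYS, 2.0)   # low end of the quoted hourly range
high = rental_cost(NUM_GPUS, DAYS, 4.0)  # high end of the quoted hourly range

print(f"GPU-hours: {NUM_GPUS * DAYS * 24:,}")
print(f"Rental cost: ${low / 1e6:.1f}M to ${high / 1e6:.1f}M")
```

Under these assumed inputs, a single run consumes 21.6 million GPU-hours and costs roughly $43M to $86M in rentals alone, before failed experiments, storage, networking, or staff are counted.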

But the real pain point is supply constraint. In 2023–2024, companies from OpenAI to Meta faced serious GPU shortages, slowing down development cycles and escalating infrastructure costs. This chokepoint made one thing clear: relying entirely on an external vendor—especially one that also supplies your competitors—is a long-term strategic risk.

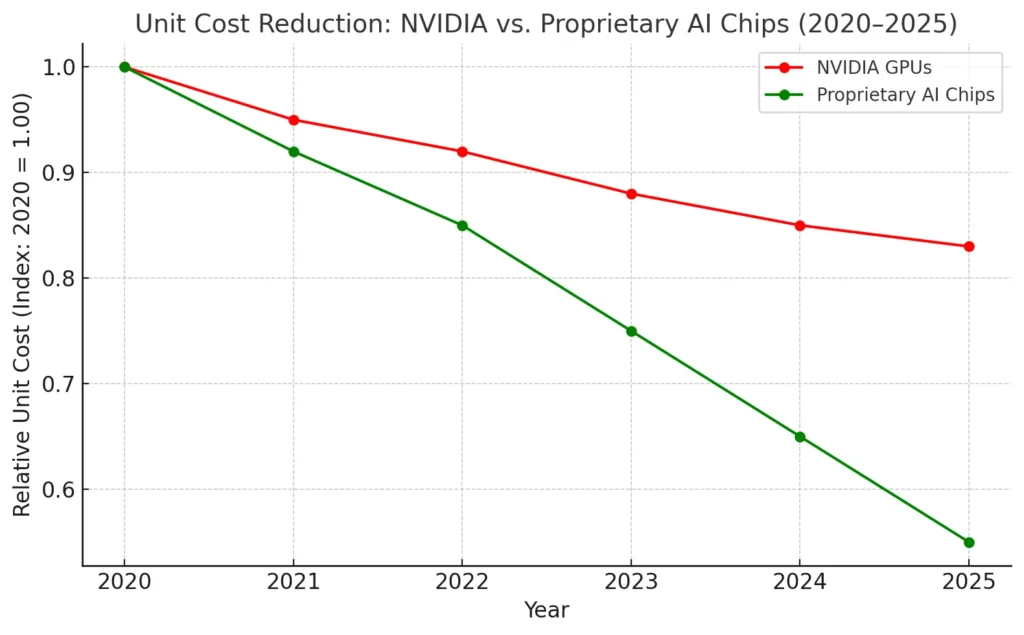

As a result, hyperscalers began seeking hardware independence. By designing their own AI accelerators, they aim to slash costs, optimize performance for specific workloads (e.g., transformers, CNNs, diffusion models), and build vertically integrated AI stacks. The result? A slow but seismic shift away from NVIDIA—and toward in-house silicon that powers everything from training to deployment.

This shift isn’t just about technology—it’s about economic survival in the AI-first era.

The accompanying bar chart compares the estimated cost of training a model like GPT-3 or GPT-4 on NVIDIA H100s with the cost on custom in-house silicon such as Microsoft’s Maia and OpenAI’s rumored Tigris. It suggests a potential 30–35% cost savings with proprietary chips, which helps explain why hyperscalers are racing to build their own AI hardware.

2. OpenAI’s Silicon Ambitions: Project Tigris and Beyond

OpenAI’s models are among the most compute-hungry in the world. GPT-3 had 175 billion parameters; GPT-4, according to leaks, may use more than a trillion parameters in a Mixture-of-Experts configuration. Each generation demands far more compute than the last, and with it, a larger hardware budget.
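A quick way to see why parameter counts translate into hardware budgets is to estimate the memory the weights alone require. The sketch below assumes 16-bit weights and an 80 GB H100; it ignores activations, optimizer state, and caches, so real deployments need considerably more. The trillion-parameter figure is the leaked estimate mentioned above, not a confirmed specification.

```python
import math

BYTES_PER_PARAM_FP16 = 2   # assumption: weights stored as 16-bit floats
H100_MEMORY_GB = 80        # HBM capacity of an 80 GB H100


def weights_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Smallest number of GPUs whose combined memory fits the weights."""
    return math.ceil(weights_gb(num_params) / gpu_memory_gb)


for name, params in [("GPT-3 (175B)", 175e9), ("1T-parameter model", 1e12)]:
    print(f"{name}: {weights_gb(params):,.0f} GB of weights, "
          f"at least {min_gpus_for_weights(params)} GPUs")
```

Even under these simplified assumptions, a 175-billion-parameter model needs about 350 GB for its weights, which is more than four 80 GB GPUs can hold, and a trillion-parameter model needs about 2,000 GB.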

Enter Project Tigris—a rumored custom silicon initiative aimed at reducing OpenAI’s dependence on NVIDIA and cloud-based GPUs. While OpenAI has made no official announcement, several signs point to its serious commitment to custom hardware:

- Hiring trends show an influx of chip designers, compiler engineers, and silicon validation experts.

- OpenAI has reportedly discussed partnering with chip startups and possibly working with RISC-V or other modular architectures.

- Close integration with Microsoft’s Azure AI infrastructure (where Maia chips are deployed) provides the perfect testbed for hybrid training setups.

The motivation is clear: OpenAI reportedly spends tens of millions monthly on cloud GPU usage. By shifting key workloads to proprietary silicon, it could cut training and inference costs by up to 30%, while improving throughput and model responsiveness.

There’s also a strategic angle. OpenAI’s mission to build artificial general intelligence (AGI) requires exclusive access to compute at scale. In-house chips would offer that control—ensuring OpenAI isn’t gated by supply limits, cloud pricing wars, or competitors using the same hardware.

If Project Tigris becomes reality, it could trigger a ripple effect across the entire AI industry, pressuring even smaller model developers to rethink their hardware stack—or risk falling behind in a world where custom chips mean faster models, lower costs, and strategic control.

3. Microsoft’s Dual-Track Strategy: Maia, Cobalt, and Azure’s AI Edge

While OpenAI captures headlines with its AI models, it is Microsoft that is quietly building the infrastructure backbone that powers them. With billions invested in data centers, model training pipelines, and now custom silicon, Microsoft is executing a two-pronged chip strategy designed to reduce dependence on NVIDIA and cement Azure as the default platform for enterprise AI.

At the core of this strategy are two chips:

- Maia: Microsoft’s in-house AI accelerator, optimized for training and inference workloads.

- Cobalt: A general-purpose ARM-based chip designed to improve power efficiency and performance for Azure’s broader cloud services.

🔧 From GPU Tenant to Silicon Owner

Maia chips are built specifically to run large-scale models like GPT-4, and the services built on them such as Copilot and Bing Chat, more efficiently than traditional GPUs. Microsoft claims Maia can offer performance comparable to NVIDIA’s H100 at a lower cost, thanks to tight optimization with its AI stack and vertical integration across software layers.

Meanwhile, Cobalt chips enhance Azure’s baseline cloud performance, reducing Microsoft’s need for Intel and AMD CPUs in core operations. This is not just about AI—it’s about total cost control across the datacenter.

💰 Economic Leverage at Hyperscale

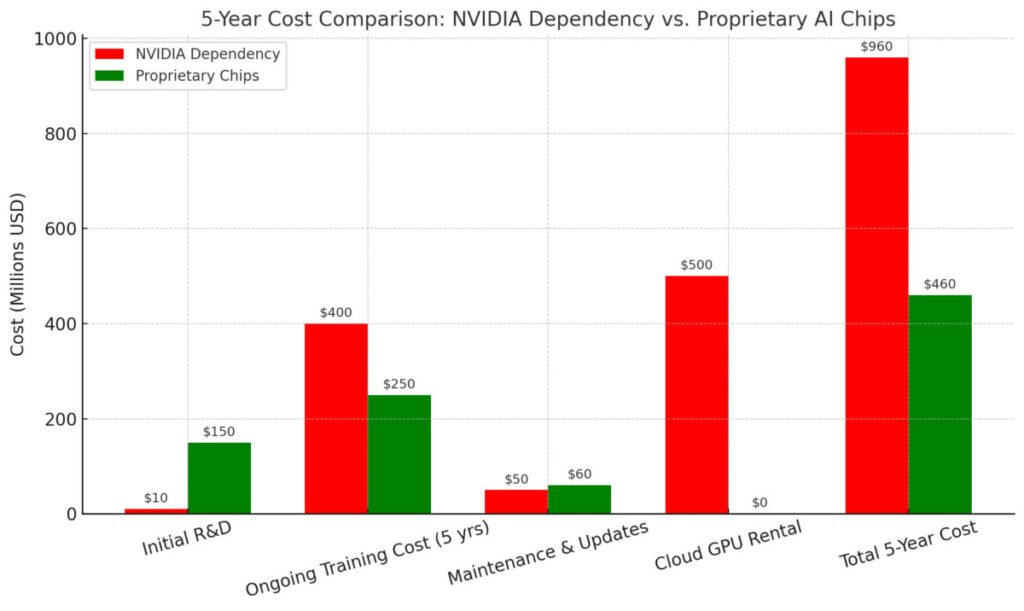

With custom chips, Microsoft avoids the markup on merchant silicon, which can represent 30–60% of total deployment costs. At scale, this translates into hundreds of millions in annual savings—while also reducing exposure to chip supply shortages that plagued the AI industry throughout 2023 and 2024.
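The arithmetic behind a claim like this can be sketched in a few lines of Python. Only the 30–60% markup share comes from the text above; the $1 billion annual deployment budget and the assumption that in-house silicon recovers half of the markup (the rest being absorbed by design and manufacturing costs) are hypothetical values chosen for illustration.

```python
def annual_savings(deployment_cost_usd: float,
                   markup_share: float,
                   fraction_recovered: float) -> float:
    """Savings from recovering part of the vendor markup with in-house chips."""
    return deployment_cost_usd * markup_share * fraction_recovered


DEPLOYMENT_COST = 1_000_000_000   # hypothetical annual hardware deployment spend
FRACTION_RECOVERED = 0.5          # hypothetical share of the markup actually saved

for share in (0.30, 0.60):        # markup range quoted in the text
    saved = annual_savings(DEPLOYMENT_COST, share, FRACTION_RECOVERED)
    print(f"Markup share {share:.0%}: about ${saved / 1e6:.0f}M saved per year")
```

With these assumed inputs the savings land between roughly $150M and $300M a year, which is the order of magnitude the paragraph describes. Different budgets or recovery fractions shift the result proportionally.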

Microsoft’s ability to co-design silicon, hardware, and software also enables it to deliver more consistent AI performance for enterprise customers, especially those using Azure OpenAI services.

🧱 Building a Competitive Moat

Unlike Apple’s edge-focused Neural Engine, Microsoft’s Maia is all about cloud-scale dominance. Every layer—from chip to platform to AI service—is owned or tightly controlled. This vertical stack forms a moat that’s difficult for rivals to cross.

And there’s another strategic layer: Microsoft hosts OpenAI’s most advanced models. By providing the infrastructure and now the silicon, Microsoft effectively inserts itself into the core loop of AGI development—even if it doesn’t own the models outright.

4. Apple’s Silent Power Play: A17 Pro, Neural Engine, and the Future of On-Device AI

While the AI spotlight often shines on cloud behemoths like Microsoft and OpenAI, Apple is waging its own AI chip war—quietly, efficiently, and at the edge. With its A-series chips and the Neural Engine embedded in iPhones, iPads, and the new Vision Pro headset, Apple is rewriting the rules of AI deployment by focusing on local inference rather than cloud-based compute.

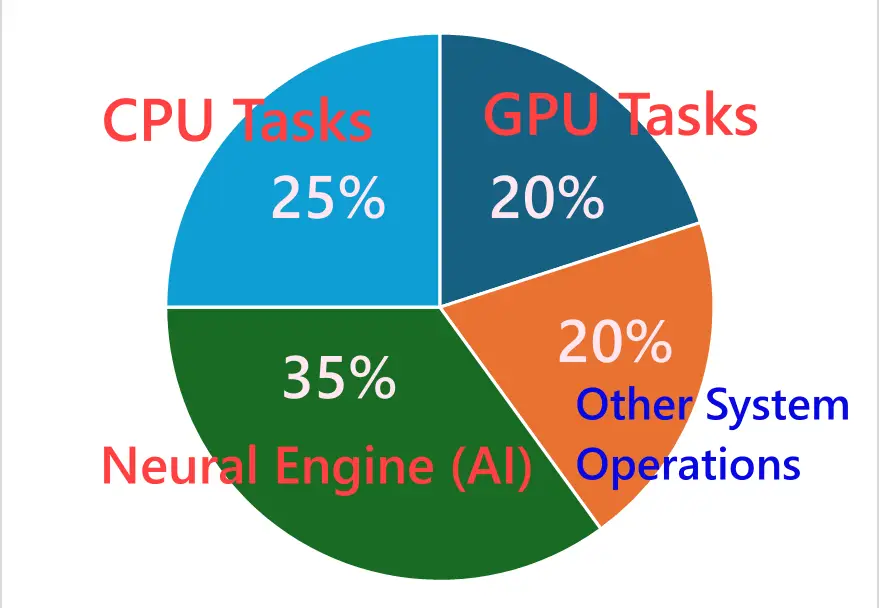

⚙️ The Neural Engine: AI That Lives in Your Pocket

First introduced in the A11 chip in 2017, where it was rated at 600 billion operations per second, Apple’s Neural Engine has steadily evolved into an accelerator capable of trillions of operations per second. The latest A17 Pro chip, built on TSMC’s 3nm node, features a Neural Engine that Apple rates at up to 35 trillion operations per second, up to 2x faster than its predecessor and capable of handling everything from on-device image generation to real-time language translation.

This isn’t just performance for performance’s sake. Apple’s edge-first approach to AI serves a strategic trifecta:

- Privacy: Processing data locally eliminates cloud transmission, bolstering Apple’s privacy-first brand.

- Latency: On-device inference drastically reduces response times for AI tasks.

- Battery Efficiency: Custom silicon delivers more compute per watt, critical for mobile devices.

💰 The Economic Upside of Edge AI

Unlike hyperscalers that incur massive CapEx and energy costs to power server farms, Apple’s investment in AI silicon is embedded in devices already sold to consumers. This allows Apple to amortize AI R&D across hardware profits, creating a self-sustaining economic model.

Every iPhone becomes an AI inference node, enabling scalable deployment of features like personalized recommendations, real-time dictation, health monitoring, and potentially on-device generative AI.

It also sets Apple apart in the AR/VR space. The Vision Pro, powered by both the M2 chip and a dedicated R1 chip for sensor fusion, showcases how Apple is extending its AI chip capabilities into spatial computing—laying the foundation for the next hardware platform shift.

🧠 Strategic Isolation from the GPU Arms Race

Perhaps Apple’s greatest advantage is its strategic independence from NVIDIA. By investing early in its own silicon, Apple has avoided the bottlenecks and volatility of the cloud GPU market entirely. It doesn’t need to compete for H100s or adapt to CUDA—because it’s already built a custom stack optimized for its own use cases.

While Apple may not train trillion-parameter models, its focus is just as significant: making AI feel invisible, intuitive, and private. In doing so, it’s building a moat not in the cloud, but in the palm of your hand.

5. Economic Ripples: Impact on NVIDIA, TSMC, and the Supply Chain

The surge in custom AI silicon is doing more than just reshaping tech giants’ internal strategies—it’s triggering profound economic ripple effects across the global semiconductor ecosystem. The traditional dominance of NVIDIA and its GPU-centric supply chain is now under siege, and the consequences are being felt from Silicon Valley to Hsinchu.

🏆 NVIDIA: From Monopoly to Margin Pressure

For years, NVIDIA enjoyed a near-monopoly on AI compute. Its CUDA ecosystem, software maturity, and GPU performance made it the default choice for model training and inference. That dominance translated into gross margins above 70% and record-breaking earnings, especially during the generative AI boom of 2023–2024.

But custom chips from Microsoft, Apple, Amazon, Meta, and even OpenAI threaten to erode that dominance. Every Maia, Trainium, or Tigris chip deployed represents one fewer H100 sold or rented. This could lead to:

- Reduced pricing power

- Slower enterprise upgrade cycles

- Lower total addressable market growth

While NVIDIA will likely maintain a stronghold in cutting-edge research and startups, its days of undisputed hyperscaler control are numbered.

🧩 TSMC: The Quiet Winner—For Now

As the manufacturing partner behind nearly all major AI chips, custom and merchant alike (Apple’s A-series, Microsoft’s Maia, Amazon’s Trainium, and even NVIDIA’s H100), TSMC is, paradoxically, benefiting from the AI chip war.

TSMC’s role is shifting from serving a few dominant GPU vendors to balancing a portfolio of dozens of AI hardware clients, increasing its geopolitical and economic importance.

However, this shift introduces new challenges:

- Capacity management becomes more complex

- Client priority conflicts may arise if demand exceeds fab availability

- Dependency on advanced packaging and 3nm/2nm nodes will pressure capital and innovation cycles

🌍 Global Supply Chain: Fragmentation and Nationalism

The move toward custom silicon has also accelerated geopolitical diversification. Countries now view AI chip supply chains as national security assets. This is leading to:

- Localized fabs and foundry incentives (e.g., U.S. CHIPS Act, EU Chips Act, China’s SMIC ramp-up)

- Export controls on EDA tools and advanced lithography (targeting China, Russia, others)

- New alliances between cloud vendors and fabless chip design firms to build independent paths

In this fragmented landscape, supply chain resilience and fab proximity may become just as critical as performance and cost.

Bottom Line:

As hyperscalers embrace custom silicon, NVIDIA may lose its grip, but the semiconductor game expands. TSMC wins in the short term—but competition, national policies, and capacity constraints loom. This is no longer just a battle of chips; it’s a battle of who controls the AI future’s manufacturing and logistics stack.

6. The Rise of In-House AI Silicon: A Competitive Necessity

What was once a moonshot for elite chipmakers has now become a strategic mandate for any tech giant serious about AI. The age of buying generic, off-the-shelf silicon is giving way to an era of custom-built AI accelerators, designed in-house, optimized for proprietary workloads, and tightly integrated with vertical software stacks. For Microsoft, Apple, Google, Amazon, Meta, and even OpenAI, building their own AI chips is no longer optional. It is the foundation of AI-era dominance.

🧱 AI Infrastructure Is the New Competitive Moat

In previous tech eras, competitive moats were built on search engines, social networks, or operating systems. Today, the moat is infrastructure—and that begins at the silicon layer.

Owning the chip means:

- Tailored performance for your models (transformers, vision, multimodal, etc.)

- Full-stack optimization (hardware ↔ compiler ↔ runtime)

- Lower unit costs for AI products and services (Copilot, ChatGPT, Vision Pro, etc.)

- Independence from rivals in the GPU supply chain

Vertical integration enables these companies to customize memory access patterns, cache hierarchies, and power consumption curves around their core AI tasks—an optimization loop that general-purpose GPUs simply can’t match.

💡 Not Just for Big Tech

While the spotlight shines on hyperscalers, even smaller AI firms and sovereign nations are exploring custom silicon through:

- Chiplet-based architectures (modular, scalable)

- Open-source hardware stacks (like RISC-V)

- Design-as-a-service firms (e.g., Tenstorrent, SiFive, SemiFive)

This democratization of chip design mirrors the rise of open-source software—suggesting that the next AI unicorns may not only write better models, but also run them on their own hardware.

📉 The Cost of Falling Behind

Companies that continue to rely solely on off-the-shelf GPUs will face:

- Escalating costs

- Limited supply access

- Performance caps

- And most importantly—loss of control over their AI product roadmap

As competition accelerates, these limitations become existential. In this new AI economy, those who control the chips control the future.

7. Risks and Roadblocks in Custom Chip Design

The promise of proprietary AI chips is compelling—cost control, performance tuning, and strategic independence—but the road to silicon sovereignty is littered with obstacles. Designing, validating, and deploying custom chips is a high-stakes endeavor with massive technical, financial, and operational risks.

🧪 R&D Complexity and Time-to-Market Delays

Developing a custom chip takes 2–3 years from concept to deployment. It involves:

- Architecture planning

- IP licensing

- EDA (Electronic Design Automation) tools

- Verification, tape-out, and manufacturing

One missed design spec or fab delay can derail product timelines and add significant cost. Even giants like Intel (which discontinued its Nervana line in 2020) and Google (with early TPU revisions) have run into difficulties in their custom AI hardware initiatives.

For startups or companies new to silicon, these challenges can be existential.

💸 Enormous Upfront Investment

Custom silicon isn’t cheap. A single advanced chip project can exceed $100 million in design and validation costs—before a single unit ships. This makes it viable only for:

- Hyperscalers with deep cash reserves

- Companies that can amortize chip costs across large platforms (Azure, iPhone, etc.)

- Nationally funded or strategically backed ventures

Those without deep pockets must partner carefully or consider semi-custom or chiplet-based alternatives.

🧰 Ecosystem Maturity and Toolchain Limitations

Custom chips need software support to function. That includes:

- Compilers and drivers

- ML frameworks like TensorFlow or PyTorch

- Deployment toolchains (e.g., ONNX, Triton, TVM)

If these don’t support your hardware—or perform poorly—your entire effort may underdeliver. NVIDIA’s software moat (CUDA, cuDNN, TensorRT) remains a formidable advantage that custom chips must overcome.

🌐 Supply Chain & Geopolitical Vulnerabilities

Even if the design is ready, the chip still needs to be manufactured. That introduces exposure to:

- Foundry availability (TSMC, Samsung, Intel)

- Advanced packaging bottlenecks

- Export controls on EDA tools, IP cores, or lithography equipment

China, for example, is heavily investing in custom silicon, but faces export controls that limit access to tools and to advanced fabrication nodes (roughly 14nm and below).

⚠️ The High-Risk, High-Reward Nature of Custom Silicon

Custom AI chip design offers massive economic and competitive leverage, but it’s a game only a few can afford to play, and even fewer can win. Those that succeed, however, gain a foundational advantage in cost, speed, and long-term product control.

It’s not just a technical investment—it’s a strategic bet on owning the future of compute.

A 5-year cost comparison between relying on NVIDIA GPUs and investing in proprietary AI chips illustrates the trade-off: while custom chips require a higher initial R&D spend, they can offer significant long-term savings by eliminating cloud GPU rentals and reducing training costs.
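The shape of such a five-year comparison can be sketched with a simple cumulative-cost model. All dollar figures below are hypothetical: a $500M annual GPU bill and a $250M upfront R&D spend are assumptions for illustration, while the 30% savings rate is taken from the lower end of the range cited earlier in this post.

```python
def cumulative_costs(years: int, gpu_annual_musd: float,
                     custom_rnd_musd: float, savings_pct: float):
    """Yield (year, GPU-only total, custom-chip total) in millions of USD."""
    custom_annual = gpu_annual_musd * (100 - savings_pct) / 100
    for year in range(1, years + 1):
        gpu_total = gpu_annual_musd * year
        custom_total = custom_rnd_musd + custom_annual * year
        yield year, gpu_total, custom_total


def break_even_year(rows):
    """First year in which the custom-chip path is cheaper, or None."""
    for year, gpu_total, custom_total in rows:
        if custom_total < gpu_total:
            return year
    return None


# Hypothetical inputs (millions of USD); only the 30% rate comes from the text.
rows = list(cumulative_costs(years=5, gpu_annual_musd=500,
                             custom_rnd_musd=250, savings_pct=30))
for year, gpu_total, custom_total in rows:
    print(f"Year {year}: GPUs ${gpu_total:,.0f}M vs custom ${custom_total:,.0f}M")
print("Break-even year:", break_even_year(rows))
```

Under these assumptions the custom path is more expensive in year one, pulls ahead in year two, and ends the five-year window about $500M cheaper. A larger upfront spend or a smaller savings rate pushes the break-even point later, and a delayed tape-out can erase it entirely.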

8. The Strategic Future: AI Infrastructure as a Moat

As generative AI reshapes every sector—from search and productivity to healthcare and autonomous vehicles—one truth is becoming increasingly clear: those who own the infrastructure will control the future. In this new era, infrastructure doesn’t just mean servers or data—it starts at the silicon layer, and extends up through models, APIs, and platforms.

🧱 Owning the Full Stack

Vertical integration gives companies like Microsoft, Apple, and potentially OpenAI a multi-layered strategic moat:

- Custom Chips (Maia, A17 Pro, Tigris): Hardware tailored to their own models and systems.

- Optimized Software & Frameworks: Compilers, toolchains, and orchestration built around the chip.

- Proprietary Models (GPT, Copilot, Siri LLM): Algorithms designed to fully leverage in-house hardware.

- Platform Integration: Seamless deployment across Azure, iOS, Office, and other environments.

- Service Lock-in: AI features embedded directly into enterprise and consumer workflows.

This architecture results in cost savings, better performance, user retention, and reduced dependence on third parties like NVIDIA, Intel, or AWS. It also allows for faster innovation cycles, since companies can iterate across the stack without external constraints.

💸 Infrastructure as Leverage

Owning infrastructure gives companies the leverage to:

- Control pricing and margins at every layer

- Dictate deployment priorities (i.e., allocate compute to the most strategic projects)

- Outperform competitors in latency, privacy, and customization

It’s no coincidence that the companies leading in AI right now—Apple, Microsoft, Amazon, Google—all own a growing share of their compute stack. The long-term vision isn’t just to build smarter models—it’s to ensure they run faster, cheaper, and more securely on hardware you control.

🌍 A New Kind of Arms Race

The AI chip war isn’t just a product strategy—it’s a national strategy, a competitive edge, and a line of defense. In the same way oil and electricity shaped the geopolitical landscape of the 20th century, AI infrastructure will shape the power structures of the 21st.

For companies, this means a future where infrastructure is not outsourced—it’s internalized, optimized, and weaponized as a core part of the product strategy.

9. Conclusion: The Quiet Race That Will Decide the AI Century

While headlines fixate on flashy product demos, the real AI race is happening behind the scenes—in silicon labs, compiler stacks, and chip foundries. The future of artificial intelligence won’t be decided just by who builds the smartest models, but by who owns the infrastructure that powers them.

OpenAI, Microsoft, and Apple aren’t just building AI features—they’re engineering a structural advantage at the chip level. They’re spending billions not to compete on GPU rentals, but to control every layer of the AI value chain. The payoff? Faster inference, lower costs, greater privacy, and the ability to scale innovation without waiting on NVIDIA or any third party.

This is no longer a question of if companies need custom AI silicon—it’s a question of when and how fast. Those who lag risk becoming dependent on external vendors, constrained by compute costs, and outpaced by vertically integrated rivals.

As AI continues to eat software, and software continues to eat the world, silicon becomes the new substrate of power. The companies that master it today won’t just lead in AI tomorrow—they’ll define the technological landscape for decades to come.

The chip war has gone silent. But it’s more decisive than ever.

